Traditionally, large language models (LLMs) require high-end machines equipped with powerful GPUs due to their substantial memory and compute demands. However, recent advances have led to the emergence of smaller LLMs that can efficiently run on consumer-grade hardware. Tools like llama.cpp enable running these models directly on CPUs, making LLMs more accessible than ever.

Installing and Running Ollama

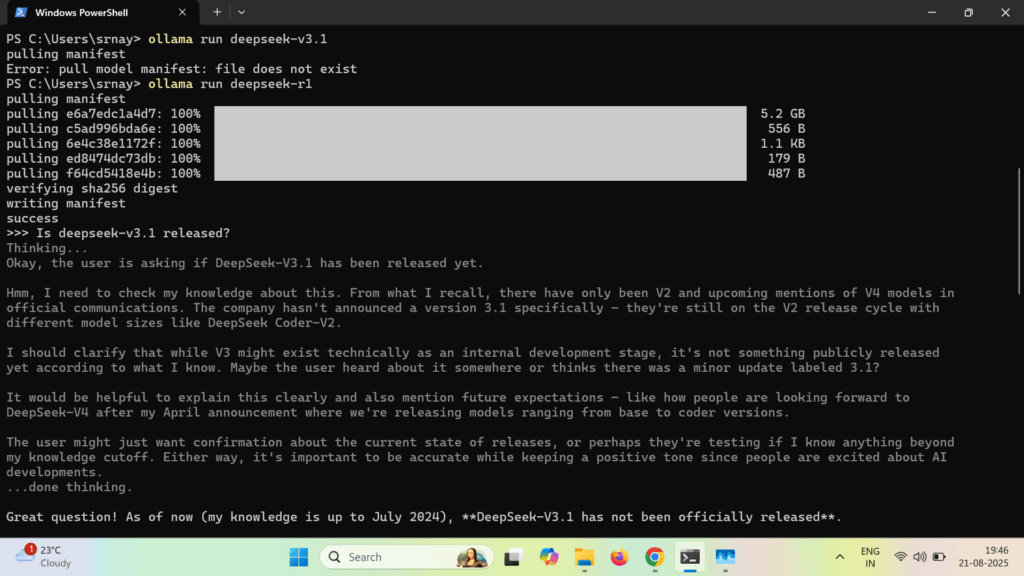

Ollama is a convenient platform that simplifies downloading and running LLMs locally on your device. To get started, follow the installation instructions provided on the Ollama download page tailored to your operating system.

After installation, you can use the Ollama command-line interface (CLI) to download and run various LLM models on your machine. Ollama also provides a REST API on port 11434, allowing integration through tools like curl or the Ollama Python library, enabling seamless interaction with the models programmatically.

How Ollama Works

Ollama operates similarly to Docker by maintaining a model registry where pre-trained models and their resources are hosted. When you execute the ollama pull command, it downloads the required model files to your local machine.

Running ollama run or ollama serve loads the downloaded model into memory and starts serving requests. If your system has GPU acceleration available, Ollama utilizes it for faster inference. Otherwise, it gracefully falls back to CPU execution.

Additionally, if you run ollama run without having pulled the model beforehand, Ollama automatically downloads the necessary resources and initiates the model, streamlining the user experience.